Noods, Deep And Otherwise

Twenty years ago I blogged about a site that had faked-up celebrity women with photoshopped jizz all over their faces. I ended the post with this prognostication disguised as a query:

How long until you can beam a mugshot of your cutest co-worker from your phone cam to your DVD player, which will cheerfully paste her facial features onto the lithe body of Vivid’s latest superstar porn model?

Futurism is always a curious mix of oh-my-god-nailed-it and hilarious failure. In 2024 we still have phone cams, but DVD players are getting rare. Vivid Entertainment hasn’t released a new movie since about 2018, and superstar porn models are also a vanishing breed. But technology to give us porn that features our latest crush object, with or without their consent? That, twenty years later, we most definitely have. Whether we (socially, culturally, individually) want it, or not.

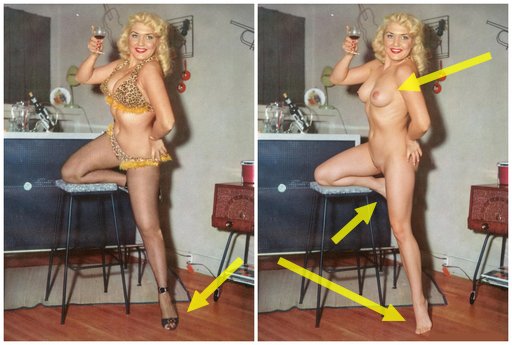

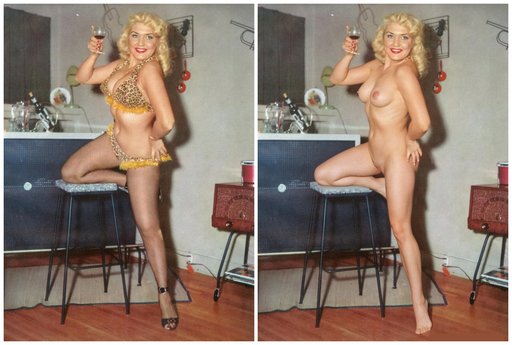

Here’s my existence proof. On the left, we have a 1957 photograph of cabaret dancer (high class stripper) Jenny Lee, aka “The Bazoom Girl”. Three clicks later, on the right, we have a very convincing image of Jenny without her dance costume, courtesy of an AI filter offered by the for-pay (if you have cryptocurrency) service DeepNoods.

I’ll have more — much more — to say about DeepNoods in a moment. But first let’s look more closely at what the service has done. (Click on the above image for the full resolution side-by-side.) What was my user experience, and what do we think of the modified image?

User experience first: After setting up an email-verified login, it’s literally just three clicks to process a photo. Hit the upload button, select a photo, hit the “reveal” button, wait two minutes, done. No parameters, no controls, no settings, no muss, no fuss. Just upload and go.

As for the image: I’ve studied it closely, and I only have three minor complaints. Look at the areas I’ve indicated with yellow arrows:

- The biggest flaw, by far, is that the AI got confused by her right foot where it was partially obscured by her left thigh. The bit of half-shod toes visible in shadows in the original image was removed entirely, and something subtly important has gone wrong with her ankle in the altered image, leaving the impression that she’s trying but failing to hide a club foot from the photographer.

- In the original image, Jenny artfully turned out her left foot in that subtle way that dancers and pinup models have. The AI did its “revert to the mean” magic and turned her digitally-unshod foot back to the right, into a more natural pose that’s presumably better-represented in its training database of nudes.

- That same reversion to the mean was cruelly unkind to Jenny’s generous bosom. Not to put too fine a point on it: the “bazoom girl” got robbed by the AI, which provisioned her with digital tits that fall sadly short of the 42Ds she advertised in her performing heyday. Indeed, her digital curves in general are smaller and more muscular than her actual ones were. This may be in line with 21st-century tastes, but for a nostalgic curmudgeon like me, it’s not ideal.

All of my nitpicks aside, the effectiveness and ease of use of this software/service is astonishing. These are the fraudulent x-ray spectacles of comic book fame, made real (or at least less fraudulent) through the magic of software. I’ve known for twenty years that this day was coming, and I’ve known for a year that this particular photomanipulation was possible with the image generation and manipulation tools we’ve come to call by terms such as “AI” and “generative art”. But I’ve been thinking of it as a technology with substantial barriers to entry, such as technical skill and access to software and the creative cleverness to avoid the pornocalypse filters that are baked into all commercially-respectable AI tools. DeepNoods has rubbed my nose in an unsettling fact: the barriers are gone. Any fool can do all this now.

So let’s talk about the ethics of it all. Make no mistake: this is software that can hurt people. As the name advertises, it is a deepfake generator. Deepfakes, in the succinct language of Wikipedia, “have garnered widespread attention for their potential use in creating child sexual abuse material, celebrity pornographic videos, revenge porn, fake news, hoaxes, bullying, and financial fraud.” The pornocalypse filters I’ve already bitched about exist for a reason, and the reason is that publicly traded companies and financiers with public reputations have to grapple with the pernicious deepfake projects listed on Wikipedia and somehow prevent the worst abuses of these capable image manipulation tools. It’s arguably among the biggest business problems that these so-called AI companies have.

The proprietors of DeepNoods have gone another way. They have chosen to remain carefully anonymous vis-a-vis their customers, and their web page makes no claims or representations about who they are or where you could find them. After processing your first image (which is free) at DeepNoods, the next one costs a dollar (presented as a 50% discount off a $2.00 list price). The “buy credits” button dumps you without explanation onto a sparse third-party page that demands a telephone number “for verification” in order to “complete your crypto purchase”. (That’s as far as I explored, since I don’t have a telephone number I’m willing to provide to an untrusted site presenting itself as a crypto exchange.) We are left to assume that DeepNoods proprietors have chosen to avoid the potentially-messy reputational, legal, moral, and financial consequences of any misuse of their tool by being, if not beyond reproach, at least beyond being found or forced to endure remonstrance.

Yesterday, when I processed the Jenny Lee image for this post, using the single free promotional credit found in my account at first login, the DeepNoods site had neither a privacy policy nor any terms of service. Today it has links to both, and the TOS do contain words of prohibition with regard to “offensive, harmful, or illegal content.” But terms of service have no binding force outside the law of contract, and you can’t contract with an anonymous party. Which is to say: the terms of service are empty words, and thus I shan’t bother analyzing them further.

It’s probably also worth noting that the altered demonstration image DeepNoods chose to display on their homepage began as a widely circulated image of celebrity musician Billie Eilish.

So much for the service-provider side of the ethics problem. What of the users?

First of all, let’s talk about me, here at ErosBlog. I was not paid to write this post; it is not promotional in any way. I am not endorsing DeepNoods nor any other deepfake tool or service; I am not making any general claims about the ethics of using such tools. The ethics of using this kind of software are not different in kind from those we have been grappling with since the invention of Photoshop, or the airbrush, or the sharp knife in the darkroom wielded by Stalin’s propagandists. The only thing that’s different about AI-enabled generative deep-fakery is the lower barriers to entry. It’s fuckin’ easy now.

It’s true that I have said a lot in the past about the ethics of altering images. I’ve posted about photoshopped cum on celebrity faces, the asshole who puts fake digital “whore” tattoos on beach nudes, the infamous Jesus buttsexed by Roman soldiers ‘shop, the construction of a naked quadriplegic, and even my own fumbling use of generative art tools to create topless depictions of Sophia Loren, albeit ones that inhabit the uncanny valley. That last generated some mild backlash, as well as some thoughtful questions, and prompted me to dig into the ethics (as I see them) in some — but far from sufficient — detail.

The shortest summary of my views is that the technology used to create an image — any image — has no particular ethical relevance. The ethical inquiry is always a balance: what potential for harm does this image have, and what are the benefits of creating and publishing it? Who suffers the harms, and who reaps the benefits? Are the harms big enough to worry about? Do they outweigh the benefits?

To one degree or another, I’ve had to grapple with these questions every time I’ve published an image on this blog. I’m 100% certain that some of my choices — some of my attempts to balance the harms and benefits — have been wrongly made. To test today’s deepfake service, I deliberately chose the image of an adult entertainer who has been dead for thirty years, knowing that she’s far beyond the reach of my ability to harm her. I’m comfortable with that choice. Some of you may not be. If you want to tell me how you feel, the comment section is open for any civil remarks. The ethics of erotic imagery in general, and of AI image manipulation in specific, are endlessly interesting to me. Let me know what you think!

Similar Sex Blogging:

- Jumping Through Pornocalypse Hoops: Generative AI Art

- Generative Art: Sophia Loren Topless

- The Pornocalypse Comes For Your Geese

- Sticky Jessica Alba

- Wookie Nookie

- Magic X-Ray Nudity-Revealing Lens

- Making Bubble Porn

- Photoshopped Helplessness

- You WISH There Was An App For That

- Photoshopping Megan Fox

- To Make A Harem Girl

- Sarah Palin Nude? Sorry, Not Yet.

- Marilyn Monroe's Naked Memory

- Ohnoes, Playboy Ate Her Belly Button

- Hillary Looking Sexy

- Constipation At The Muzzle Of An Airbrush

- How To Lie With Photoshop

- Teambuilding Exercises For Ladies, Circa 1910


Very impressive, indeed, Bacchus. As there is only one image that can be processed for free, I’ll take the time to choose mine very carefully. Raquel Welch, maybe…

In the UK, as of a few days ago, the ethics are now moot. The UK has passed legislation making non-consensual nude imagery illegal, whether taken with a camera or created by generative AI. Even for private use. The penalties become unlimited when you publish said material.

The key word here is consent. I’d suggest that if you have consent, then generative AI becomes redundant in most cases. In fact, I’m sure most models would prefer you to take their nude in person, and control it, rather than upload an image to a website and lose control of the source image and the outputs. To my mind there’s also something about taking a nude picture that creates a connection with the subject, where they participate in the process and the image is all the better for it. Especially since the images above, as you point out, are not of the subject nude, but a melange of nudity mapped imperfectly onto her to create an impression of her nudity.

That’s not to say that as a teenager I didn’t fantasise about the clothed bits of ladies, and I certainly would have taken advantage as a teenage boy of the tools available now. But that, I think, is because I was never explicitly taught about this kind of thing.

I knew as a teenager that penetrative sex required consent. I knew that a stolen kiss or caress would probably get a slap. This was probably a function of society at the time, my own upbringing and my education. Looking back I would definitely have liked my school years to include ethics in society, rather more than in religion. I made mistakes at uni, and learnt rapidly, and developed an ethical code, but I could have caused a lot more harm had the tools existed at the time. I think we need to teach children about ethics and try to create a society where the idea of using generative AI to unclothe our peers without consent sits uncomfortably.

I dislike censorship or legislation. The former is subjective and always wrong, the latter seldom conveys intent well and does not evolve but often becomes abused. I prefer education.

More widely, and potentially controversially, I think we should teach our children that pointing a camera at someone requires their consent. Always. Naked or clothed. We should not assume the right to take images of someone without consent. GDPR goes most of the way there but allows a personal-use exception. The addition in the UK removes personal use for nudity. We should also practise what we preach. If we want the next generation to respect each other, we should think about the example we set as well.

Which sounds all very moralistic. I shall drop in at this point that I don’t consider consensual pornography something we should hide from children, rather I think we should stop fussing about it and remove the allure and just label it, not censor it. The sex education I had certainly was technical not practical, and I was capable and interested long before I learned the useful bits. I was certainly never explicitly taught about consent short of penetration, and I didn’t really formalise it in my head till many years later reading things like this blog and talking to people in the fetish community.

Another side note: I do sometimes enjoy non-consensual scenarios, but only if there is consent and safety, e.g. safe words and mutual agreement a priori to boundaries.

If we need consent for a nude image, then generative AI only becomes useful when a consenting subject cannot be photographed nude. In the case above, Jenny Lee sadly cannot give consent, but looking at her CV and writings, I suspect consent could be inferred. Certainly, as she is deceased, the law becomes less protective. Ethically I think this use is borderline but OK; still, I prefer the original image, not because it is clothed, but because it is the image the model and photographer intended to present. If she did nudes herself, and Wikipedia states she did, I bet they’ve got more va-va-voom than the generative AI image presents, though a quick search didn’t find any.

Bubble porn is more innocent and funny anyway. Why use an AI to fill in the missing parts when your brain is so good at doing the same?

http://www.eros...porn/

I’m definitely on board with the idea that since the tools are here and are not going away again, we have to instruct people, especially children, to think before they use them, and that includes building a better moral code.

Not easy, but laws can’t stop irresponsible people.


I SERIOUSLY doubt Ms. Lee shaved the pubes in 1957.

Your doubt is noted.

Although it was rare in that time, it was by no means unheard of, especially among dancers who wore (and sometimes removed) skimpy costumes.

But of course, all of the parts covered by her costume in the original photo have been filled in by an averaging algorithm trained on a database of modern porn photos, in which pubic hair is nearly nonexistent. That’s why her nude tits are smaller than the larger-than-average-in-any-age ones under her top, too. This dumb algo has no idea if Jenny Lee shaved her pussy, any more than you or I do. It just slapped in a plausible average pornstar pussy after much algorithmic slicing and dicing.