Pornocalypse Comes For Your Keyword Searches

Thursday, September 10th, 2015 -- by Bacchus

#Pornocalypse. It comes for us all, yadda yadda. But what is it, really?

When I first started talking about #pornocalypse, I had a very specific observation to share about the corporate/financial life cycle of internet companies. In the typical cases, internet ventures are adult-friendly when their service is new, and so they enjoy a robust pulse of early traffic from people playing with porn on their platform. Then, as a service/company grows, there comes an irresistible financial pressure to “sanitize” the product, kicking off all the porn so that nobody in corporate management has to confront their own hypocrisy or the squeamishness of financial counterparties when the company seeks to go public, get acquired, or raise additional capital.

Although I first used #pornocalypse to talk about the distinctive pulse of porn bans and adult-industry user purges that we see at the “cashing in” stage of an internet venture’s corporate life cycle, I’ve come to realize that that’s not the whole story. For instance, it’s growing more common for companies to plan their “cashing in” phase from day one, so they may ban all the porn ab initio, giving up the helpful porn influence on the early growth phase in exchange for less hassle during the cashing-out phase. Nowadays it’s harder to tie porn-hostile corporate behavior to the formerly-notorious moment when prudish investment bankers would start looking aghast at all the porny traffic. So I’ve come to use #pornocalypse more loosely, as a handy shorthand for any porn-hostile moves by internet companies.

All that’s by way of preface. This post is about a particular pornocalyptic dodge that we’re seeing more frequently in recent years. Along with content deletions and user bans, a growing trend is to fuck with discovery. The porn is there — and the terms of service may even allow it to stay there — but the search and discovery tools won’t show it to anybody. Welcome to invisibility, you porny motherfuckers.
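To make that mechanism concrete, here's a minimal sketch of what "the content stays, the search lies" might look like in code. Everything in it is hypothetical: the `search` function, the `BLOCKED_TERMS` set, and the sample posts are invented for illustration, and no company's real implementation is being described.

```python
# Hypothetical sketch: a search layer that hides results for blocked terms
# without deleting the underlying posts. All names and data are invented.

BLOCKED_TERMS = {"example-blocked-tag"}  # placeholder, not a real blue list


def search(posts, tag, blocked=BLOCKED_TERMS):
    """Return posts carrying `tag`, unless the tag itself is blocked."""
    wanted = tag.strip().lower()
    if wanted in blocked:
        # The posts still exist; the searcher just sees an empty result.
        return []
    return [post for post in posts if wanted in post["tags"]]


posts = [
    {"id": 1, "tags": {"example-blocked-tag"}},
    {"id": 2, "tags": {"example-blocked-tag", "cats"}},
    {"id": 3, "tags": {"cats"}},
]

hidden = search(posts, "example-blocked-tag")   # empty list
visible = search(posts, "cats")                 # posts 2 and 3
```

Note that post 2 never went anywhere: it still turns up under `cats`, blocked tag and all. Nothing was deleted; only the doorway was bricked up.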

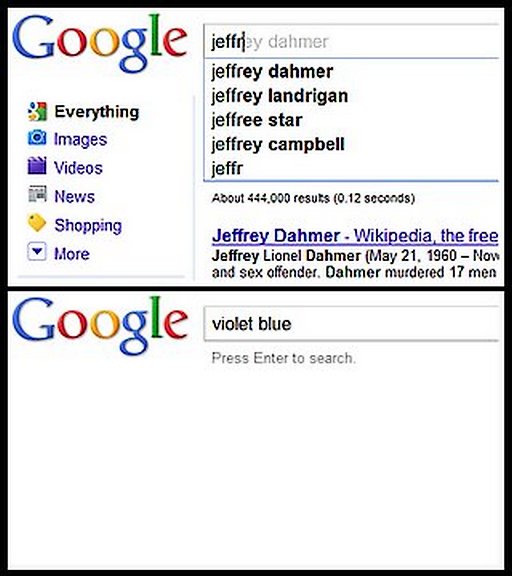

For an early example, consider this post from 2011, in which I visually documented how Google’s then-new(ish) autocomplete service (sometimes called Google Instant) considered Violet Blue too porny to suggest when suggesting searches on the fly:

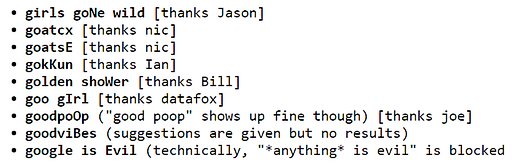

I was unaware when I posted that back in 2011 that 2600.com had already sussed out and published a long list of the keywords that Google Instant was blocking. It turns out that Violet was in astonishingly good company! Here are the names of actual humans I was able to identify on the list:

- Betty Dodson

- Bianca Beauchamp

- Carol Queen

- Courtney Trouble

- Ducky Doolittle

- Ginger Lynne (probably typo for Ginger Lynn)

- Jackie Strano

- Jenna Jameson

- Jesse Jane

- Jordan Capri

- Linda Lovelace

- Madison Young

- Miki Sawguchi

- Nina Hartley

- Pamela Anderson

- Paris Hilton

- Sadie Lune

- Shanna Katz

- Shar Rednour

- Shauna Grant

- Shay Lauren (typo for Shay Laren?)

- Traci Lords

- Violet Blue

How did Violet Blue get on this Google blue list? Well, it’s not clear; but it might become clearer if you take out all of the porn performers and the pop-culture celebrities. Ogle this shorter list:

- Betty Dodson

- Carol Queen

- Ducky Doolittle

- Jackie Strano

- Sadie Lune

- Shanna Katz

- Shar Rednour

- Violet Blue

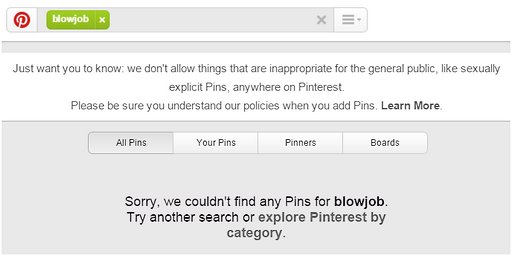

I don’t know everything about all of the people on that list, but I see a lot of sex educators, and I know that at least three of them (four, with Violet) have been in the past or remain to this day closely associated with Good Vibrations, the famous female-friendly San Francisco sex store with the educational mission at goodvibes.com. Hey, do you suppose Good Vibrations was also on the ban list? Well, duh; as surely as bears shit in woods, there they are:

My theory: at some point, an anonymous blue-list technician decided to give Good Vibrations and its whole crew the naughty words treatment. Why? I doubt we’ll ever know. But it may have been well before Google acquired and implemented this particular blue list; after all, Violet was gone from Good Vibrations after 2006. (The short selection of megafamous porn performers also seemed curiously dated even in 2010, suggesting again that the list may have been in circulation for a long time before Google got it.)

By now we understand that there’s a long-established ecosystem of blue list sharing among tech companies and blue-list technicians. More evidence: in 2012 the CEO of Shutterstock (a stock photography site) posted to GitHub a list of 342 “dirty, naughty, obscene, and otherwise bad words” that starred Violet’s name as the only person on the blue list. Although by then the vintage porn stars and Violet’s compatriots in the Good Vibrations San Francisco sex mafia had been scrubbed, the list shares clear ancestry with the Google list as exposed by 2600.com. Consider the persistence of “leather straight jacket” on both lists. Not only is this an oddly specific item for a naughty words list, it’s doubly erroneous; the item in question is most often written as “straitjacket” (one word, no “g”). How likely is it that “leather straight jacket” got put on both lists without those lists having a common source?
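The common-ancestry argument boils down to a set intersection: line up two lists and see which oddball entries show up on both. A rough sketch, assuming each list is available as plain strings; the `shared_entries` helper is my own invention, and the two excerpts below hold only items mentioned in this post (their capitalization is a guess), not the full published lists.

```python
# Hypothetical sketch: find entries that two blue lists have in common.
# The excerpts are tiny illustrative samples, not the real full lists.


def shared_entries(list_a, list_b):
    """Return the entries present in both lists, ignoring case and padding."""
    normalized_a = {entry.strip().lower() for entry in list_a}
    normalized_b = {entry.strip().lower() for entry in list_b}
    return sorted(normalized_a & normalized_b)


google_excerpt = [
    "Violet Blue",
    "Carol Queen",
    "Betty Dodson",
    "leather straight jacket",
]
shutterstock_excerpt = [
    "violet blue",
    "leather straight jacket",
]

overlap = shared_entries(google_excerpt, shutterstock_excerpt)
```

A shared common word proves little. A shared misspelling like "leather straight jacket" is the kind of overlap that is hard to explain without one list having been copied from the other, or both from a third.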

The only conclusion is that blue-list engineers have been passing around and sharing their blue lists for a long time, and likely were already doing so back when Google grabbed and implemented the list with Violet’s name on it. This process continues; the Shutterstock list at GitHub has been forked 112 times since 2012, which emphasizes to me that tech companies continue to seek, modify, and implement these blue lists in their products, sharing their efforts as they go.

Unfortunately for prudish tech companies, no such blue lists long survive contact with the enemy. (That would be us.) In the age of the hashtag, users get very creative about tagging the adult goodies they want to share and see. Thus did Instagram, which is famously porn-hostile, come in for a lot of ridicule this summer when its Long Guerrilla War On Porn got featured on Talking Points Memo:

But as many porn hashtags as there are, many more have been quietly erased by Instagram, revealing nothing when you search for them. Pop in #sex and you’re told “No posts found.” Ditto #adult, #stripper, #vagina, #penis, #cleavage. Even the Internet’s ultimate innuendo, the eggplant, wasn’t safe. You can still tag your posts with banned hashtags and emojis, but good luck finding your community within. Typo-laden tags have popped up to accommodate these arbitrary bans: #boobs is gone, but as I write this, #boobss has well over 600,000 posts; #adult’s spinoff #adule is quickly closing in on 100,000. The tag for #seduce may now be useless, but variants like #seduced and #seductivsaturday cropped up in its place–though it’s worth noting that in the weeks since I’ve been writing this article, #seductiv, the tag that brought me into this world to begin with, has vanished entirely, as has #boobss, #adule, and #eggplantparm, after BuzzFeed caught wind of the fact that the eggplant emoji was not searchable on the app. The goalposts on these hashtags have moved considerably: In 2012, Huffington Post reporter Bianca Bosker wrote about Instagram’s early porn community, but back then, the banned hashtags were far more intuitive: #instaporn, for instance, or #fuckme.

Some more light was shed on Instagram’s evolving war on hashtags after they caught a ton of flak from the body-positive community for banning #curvy from their search results. Of course they claimed it was an automated mistake, and later unblocked the #curvy hashtag after giving it an intensive human-driven curatorial scrubbing:

After a week of controversy, Instagram is unblocking the #curvy hashtag, effective Thursday afternoon.

Instagram first prevented users from searching for photos with the term last week, prompting a huge backlash from users and women’s advocacy groups who were outraged to see a term normally associated with body-positive messages removed from the site. A spate of replacement hashtags, including “curvee,” “bringcurvyback” sprang up to fill in the gap.

The problem was that the #curvy hashtag was being used for other reasons, said Nicky Jackson Colaco, Instagram’s director of public policy. Namely, pornography.

And the tag was overrun, she said. Instagram has protocols in place to flag when any term is being consistently associated with content that breaks the company’s terms of service. Jackson Colaco said that Instagram removes several tags every day when analysis from the company’s automated and human content filtering systems get reports from users that they’ve become a problem. And at some point last week, #curvy hit the tipping point.

…

As Instagram moves to restore the hashtag, it’s also taken the time to find new tools to help it better parse through the photos that its 300 million users post to the site every day. That means stepping up curation of the hashtag, particularly on sections of the service that highlight the “top posts” and “most recent” posts using the marker to make sure that no one looking at #curvy pictures gets an obscene surprise.

The discovery pornocalypse is also highly visible at Tumblr. Do they seriously think anybody will believe this negative search result?

Now, in Tumblr’s case, there’s a workaround. They’ll let you turn off the filtering. But study that page. Click the graphic for the full-sized version. Do you see a “These results are filtered” link that you can click to turn off the filter? You will look in vain for that. If you were sufficiently credulous, you might even come to believe that there’s no anal sex on Tumblr at all. That would seem to be the impression Tumblr wants to convey to the naive searcher. If you’re willing to believe that, Tumblr is delighted to let you believe it.

But what if you’re not quite that stupid? What if you’re looking at that screen and mumbling “Fuckers! I know you’ve got some anal in here somewhere! What do I have to do to see it?”

Well, look closely. Look double closely. Somewhere on that page there’s an icon, nine pixels wide by twelve pixels high. If you can find those 108 pixels, and if you can guess what they mean, causing you to click upon them, then (and only then) Tumblr’s anal floodgates will open for you. Good luck!
